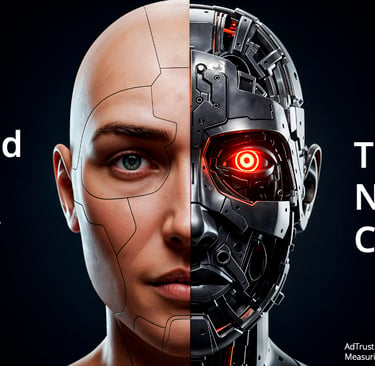

When AI dilutes the truth, brand safety becomes a democratic issue

The explosion of content generated by artificial intelligence is no longer a trend; it is a game changer. Photorealistic images, fake testimonials, mass-produced product reviews, deepfake videos, automated articles, orchestrated comments: producing credible content at scale has never been so accessible. At the same time, social media platforms have gradually reinforced a doctrine of maximum freedom of expression, particularly in the United States, where regulation relies more on individual responsibility than on editorial moderation. As a result, all content tends to coexist at the same level of visibility in social feeds. Verified information, personal opinion, advertising, satire, manipulation, fake reviews, deepfakes: the algorithm arbitrates attention, not truthfulness. For brands, this raises a simple question: where does brand safety stand now?

Stéphane LE BRETON

2/15/2026 · 7 min read

Explosion of fake reviews: regulation intensifies

For years, fake reviews were treated as a minor annoyance. A technical problem. An isolated incident.

In 2026, this is no longer the case. Authorities have realized that consumer reviews are no longer just a marketing tool. They directly influence:

purchasing decisions,

brand reputation,

trust in platforms,

and, more broadly, market integrity.

In the United States: the FTC cracks down

The Federal Trade Commission has adopted a specific rule targeting fake reviews and misleading testimonials. It now explicitly prohibits:

the creation or sale of fake reviews,

the use of artificially generated reviews,

undisclosed testimonials from employees or partners,

the selective removal of negative reviews.

Penalties can exceed $50,000 per violation.

This is not a cosmetic adjustment; it is official recognition of a simple fact: the manipulation of reviews has become a systemic threat. And the massive influx of AI-generated content only amplifies the risk.

United Kingdom: fines proportional to turnover

In the United Kingdom, the Digital Markets, Competition and Consumers Act significantly strengthens the powers of the Competition and Markets Authority.

Companies that publish or facilitate misleading reviews can now be fined up to 10% of their global revenue. The message is clear: the accuracy of reviews is no longer a matter of image. It is a matter of structural compliance.

Within the EU: growing pressure for transparency

The European Union, through the Digital Services Act (DSA), imposes on large platforms:

greater transparency regarding recommendation systems,

enhanced moderation requirements,

increased liability for the dissemination of misleading content.

In a context of explosive growth in AI-generated content, these requirements aim to prevent fake content from becoming commonplace.

The real issue: trust is becoming regulated

This regulatory tightening reflects a profound change. For years, trust was a competitive advantage. Today, it has become a legal requirement.

This is because the proliferation of fake reviews, deepfakes, and synthetic content makes authenticity rarer, and therefore more strategic.

The more unstable the information environment, the greater the value of verified environments.

A paradoxical tension

What makes the situation particularly striking is the discrepancy:

Regulators are stepping up protection against fake reviews.

Consumers are demanding greater authenticity.

Brands know that social proof is decisive.

And yet, advertising investments continue to be concentrated in environments where the mass production of AI-generated content makes verification more complex.

Regulation sends a clear signal: the battle is no longer just about attention. It's about credibility.

Consumers demand authenticity and transparency

If regulation is intensifying, it is not solely at the instigation of the authorities. It is primarily because consumers have changed.

In an environment saturated with sponsored content, subtle placements, automated reviews, and algorithmic recommendations, vigilance has increased.

Audiences don't reject advertising; they reject opacity. According to Nielsen's Global Trust in Advertising Study, nearly 88% of consumers say they trust recommendations from people they know, and more than 70% trust online reviews when they are perceived as authentic.

These figures are fundamental: social proof remains one of the most powerful drivers of trust in contemporary marketing.

But this trust is no longer blind.

Conditional trust

BrightLocal's Local Consumer Review Survey 2023 shows that:

82% of consumers say they have identified fake online reviews.

A majority say that even a hint of manipulation is enough to significantly damage the perception of a brand.

In other words: consumers want reviews—but they know the ecosystem can be manipulated.

This tension creates a new implicit standard:

The evidence must be visible.

The origin must be identifiable.

The context must be credible.

The process must be transparent.

Without these elements, a review stops building confidence and starts fueling doubt.

The post-naivety era

We have entered a post-naivety era. Consumers know that:

reviews can be bought,

influencers can be paid,

ratings can be manipulated,

comments can be generated by AI.

This lucidity does not lead to systematic rejection. It leads to an increased demand for transparency.

Audiences accept advertising. They accept reviews. They even accept commercial partnerships.

What they no longer accept is concealment.

Authenticity as a competitive advantage

In this context, authenticity is no longer an optional extra. It becomes a competitive advantage.

A brand capable of demonstrating:

that its reviews come from real customers,

that its testimonials are verified,

that its evidence is traceable,

immediately reduces the level of cognitive friction.

Conversely, an environment where everything seems possible—false, exaggerated, amplified—generates information fatigue that penalizes even virtuous actors. Mistrust becomes widespread.

Strategic repositioning

This change has a direct impact on media and advertising. When authenticity becomes rare, environments that can offer the following become more valuable:

a clear framework,

editorial responsibility,

accountable moderation,

explicit verification.

The demand for authenticity is therefore not a marginal social phenomenon. It is a strategic variable that is redefining the hierarchy of advertising environments.

In a world where AI can generate thousands of credible reviews in a matter of seconds, the question is no longer simply “Do consumers trust reviews?” but rather “In what context, and under what guarantees, can this trust be sustained over the long term?”

Attention and trust depend on the media context

Attention and trust do not exist in a vacuum. They are deeply influenced by the context in which a message is received.

The same advertising content does not carry the same value from one environment to another. When broadcast in a saturated social feed, between AI-generated content, a controversial video, and a viral opinion, it is perceived as just one element among many, often indistinct and sometimes suspicious. When placed within an editorially curated, clearly identified, and structured media outlet, it benefits from a framework, implicit legitimacy, and a form of stability.

This phenomenon is not theoretical. Research on attention measurement conducted by FranceTV Publicité with Ipsos and Tobii shows that structured premium environments, particularly television, generate more seconds of attentive viewing than scrollable and skippable environments. Attention there is less fragmented, less divided by multitasking, and less defensive. It is more readily available.

But the issue goes beyond exposure time alone. The media context acts as a credibility filter. Media outlets have legal, editorial, and institutional responsibilities. They select, prioritize, and verify. This architecture creates a showcase effect: the advertising message is not isolated in an indistinct flow but integrated into a coherent whole.

Conversely, in environments where all content coexists on the same level—information, opinion, satire, fake reviews, deepfakes—the hierarchy disappears. The algorithm favors engagement, not reliability. Advertising is then exposed to the risk of contextual contamination: even if the message is rigorous, the context can undermine its perception.

In this case, trust no longer depends solely on the brand. It also depends on the media outlet that hosts it.

Studies by the Reuters Institute show that trust in information varies greatly depending on the channel through which it is disseminated. Established media outlets continue to be perceived as more reliable than content shared via social media platforms. This difference in perception is not insignificant: it influences how a commercial message is interpreted, remembered, and evaluated.

In other words, attention is measurable. Trust, on the other hand, is contextual.

And in an environment where AI makes the fake more credible and the real harder to distinguish, choosing the media context becomes a strategic act. It is no longer just a decision about coverage or performance. It is a decision about credibility.

While attention captures the gaze, context conditions interpretation. And it is this interpretation that ultimately determines the real impact of a message.

The flight of advertising investment: performance, but at what cost?

The growing concentration of advertising investment in digital platforms cannot be explained solely by technical or budgetary considerations. It is based on a promise of performance, real-time optimization, and granular targeting.

However, this dynamic is having a systemic effect. In France, traditional media (television, radio, and print) account for only around 33% of the total advertising market in 2025, compared with 46% in 2021. Most of the growth is now being captured by the major global technology platforms.

This shift is not neutral. It changes:

the structure of information financing,

the balance of pluralism,

and the ability of national media to invest in demanding content.

It was precisely this observation that led Publicis Media to launch the Media Citizen initiative. The stated ambition is clear: to reconcile media performance and pluralism by gradually reallocating a portion of investments to editorial environments—press, television, cinema—considered essential to public debate.

The idea is strategic: advertising doesn't just fund impressions or clicks. It funds ecosystems.

In a context where so-called “traditional” media are struggling to maintain their economic balance, the issue is no longer just one of short-term ROI, but of the sustainability of the information model.

Brand safety: a risk that can now be measured

This observation ties in with another reality documented by brand safety studies.

According to analyses by Integral Ad Science (IAS), the presence of an advertisement near content deemed risky—misinformation, extreme discourse, artificially generated content—can lead to:

a significant decline in purchase intent,

a deterioration in brand perception,

and a decrease in advertising recall.

DoubleVerify studies also confirm that premium editorial environments score higher on brand suitability than open, unmoderated environments, and generate higher levels of consumer trust.

In other words: raw performance does not always compensate for contextual risk.

The more saturated the digital ecosystem becomes with AI-generated content, the greater the competitive advantage of being able to guarantee a reliable environment.

Strategic tension

The paradox is striking. On the one hand, platforms offer:

advanced optimization,

real-time metrics,

and unmatched targeting capabilities.

On the other hand, editorial media provide:

a framework,

legal accountability,

a hierarchy,

and increased protection against misinformation.

The Media Citizen initiative led by Publicis Media illustrates this tension: how can we reconcile the performance expected by advertisers with the need to support media environments that are fundamental to democracy?

The flight of advertising investment is therefore not just an economic problem. It raises questions about the model itself.

In a world where AI makes it impossible to distinguish what is real from what is fake at scale, brand safety can no longer be reduced to a technical filter. It has become a strategic choice of context.

And this choice determines not only short-term advertising effectiveness, but also the long-term credibility of the ecosystem.

The issue is no longer just about protecting brands from risky environments. It is about protecting the public sphere from the erosion of truth.

Our commitment

Reviving trust in brand advertising through innovation.

Contact

info@buytryshare.com

+33 6 83 53 70 41

© 2025. SLB consulting. All rights reserved.